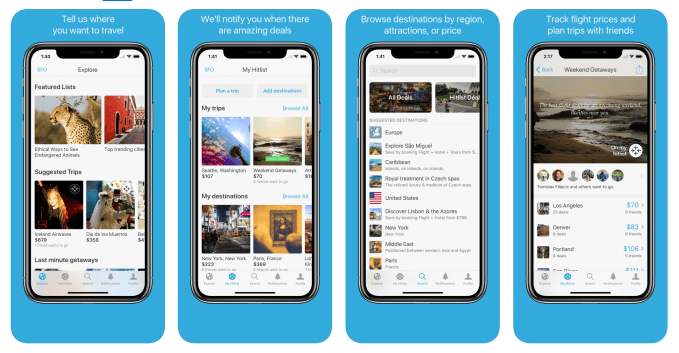

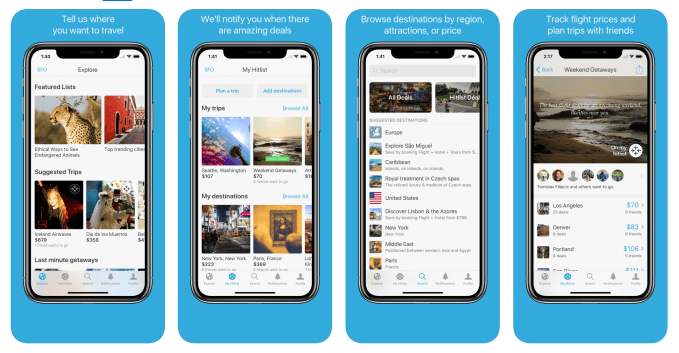

Hitlist, a several-years-old app for finding cheap flights, has begun rolling out a subscription tier that will turn it into something more akin to your own mobile travel agent. While the core app experience, which monitors airlines for flight deals, will remain free, the new premium upgrade will unlock a handful of other useful features, including advanced filtering, exclusive members-only fares, and even custom travel advice from the Hitlist team.

The move, says founder and CEO Gillian Morris, goes back to the original idea that inspired her to create Hitlist in the first place.

“Going back to the very beginning, Hitlist was essentially me giving travel advice to friends,” she says. “People had the time, inclination, and money to travel, but didn’t book because they got lost in the search process. When I sent custom advice, like ‘you said you wanted to go to Istanbul, there are $500 direct round trips in May available right now, that’s a good price and the weather will be good and the tulip festival, this unique cultural experience, will be happening’ – 4 out of 5 people would book,” Morris explains.

“I wouldn’t be able to scale that level of advice at the beginning, so we focused on just the flight deals. But now we have four years’ worth of data that we can learn from – browsing and searching within Hitlist – and we can start to build more sophisticated models that will inspire and enable people to travel at scale,” she says.

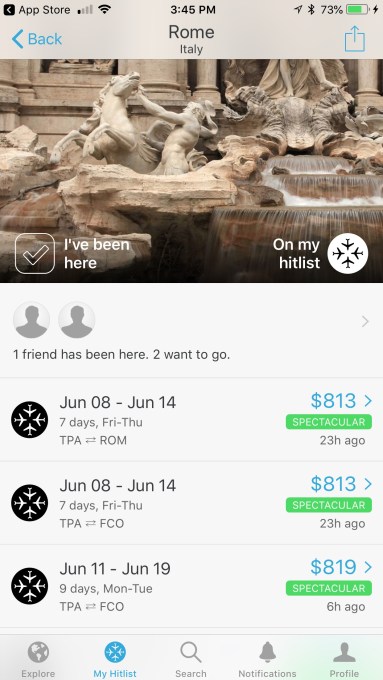

The new subscription will let users filter airline deals more precisely by carrier, number of stops, and the time of day of both the departure and the return.

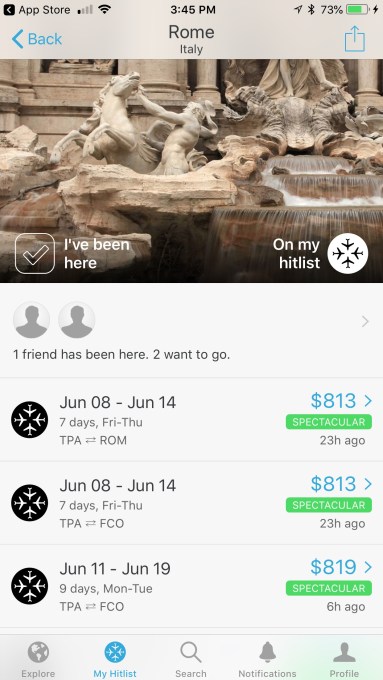

Hitlist is also working with airlines to market “closed group” fares that aren’t accessible through flight search engines but are available to select travel agents and other resellers that market to a closed user group. These will be flagged in the app as “members-only” fares.

Hitlist says it’s currently working with one airline and, through a third party, with several more. But because this is still in a pilot phase and is only live with select users, it can’t say which.

Meanwhile, the app will continue to focus on helping users find the best low-cost fares, not only by tracking deals but also by bundling low-cost carriers and traditional airlines together. However, it won’t promote dates that are likely to be cancelled by airlines, nor will it venture into legally gray areas like skipping legs of a flight (as Skiplagged does) to find cheaper fares.

Beyond just finding cheap flights – which remains a competitive space – Hitlist aims to offer users a more personalized experience, more like what you would have gotten with a travel agent in the past.

For starters, Hitlist developed a proprietary machine learning algorithm that sorts through more than 50 million fares’ worth of data per day to find deals that appeal to each individual user. It also learns from how you use the app, for example which flights you browse and how you react to alerts.

“The app gets to know you better over time, just like a human travel agent would,” says Morris. “With the premium upgrade, we’re gaining more insight to the traveler’s preferences that helps us to develop even more sophisticated A.I. to provide advice and make sure you’re getting the best deal.”

When you find a flight you like, Hitlist sends you to a partner’s site, such as the airline’s own site or an online travel agency like CheapOair.

Where Hitlist differs from other apps that are also trying to replace the travel agent, like Lola, Pana or Hyper, is that it doesn’t offer a chat interface. Morris feels that, ultimately, travelers don’t want to talk to a chatbot; they just want to browse and discover, then have an experience that’s tailored to them as the app gets smarter about what they like.

That’s where Hitlist’s editorially curated suggestions come in. These can be as broad as “escape to Mexico” or as weird and quirky as “best cities to find wild kittens.” (Yes, really.)

Hitlist will also offer travelers a variety of advice to help them make a decision, similar to how Morris used to advise her friends. For example, it might suggest the best days to fly (as Google Flights and Hopper do), flag baggage fees, or tell you what sort of events might be happening at a destination.

Since its launch, Hitlist has grown to over a million mostly millennial travelers, who have collectively saved over $25 million on their flights by booking at the right time.

The new subscription plan is live now on iOS as an in-app purchase for $4.99 per month, with better rates for quarterly and annual subscriptions: $4 per month and $3 per month, respectively. It will roll out on Android later in the year.

Source: TechCrunch