Eight Microsoft interns have developed a new language learning tool that uses the smartphone camera to help adults improve their English literacy by learning the words for the things around them. The app, Read My World, lets you take a picture with your phone to learn from a library of over 1,500 words. The photo can be of a real-world object or text found in a document, Microsoft says.

The app is meant either to supplement formal classroom training or to offer a way to learn some words for those who don’t have the time or funds to take a language learning class.

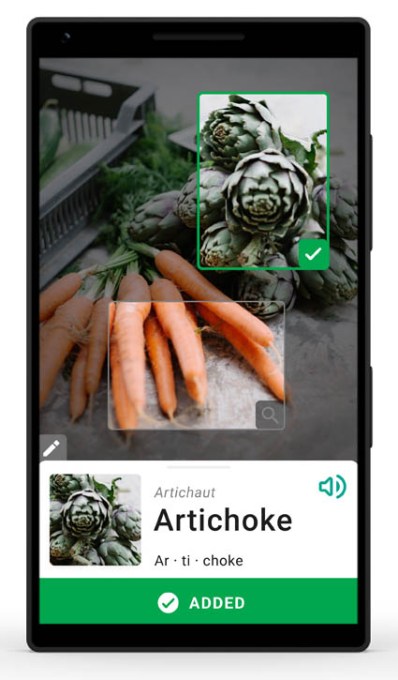

Instead of lessons, users are encouraged to snap photos of the things they encounter in their everyday lives.

“Originally, we were planning more of a lesson plan style approach, but through our research and discovery, we realized a Swiss army knife might be more useful,” said Nicole Joyal, a software developer intern who worked on the project. “We wound up building a tool that can help you throughout your day-to-day rather than something that teaches,” she said.

Read My World uses a combination of Microsoft Cognitive Services and Computer Vision APIs to identify the objects in photos. It will then show the word’s spelling and speak the phonetic pronunciation of the identified vocabulary words. The photos corresponding to the identified words can also be saved to a personal dictionary in the app for later reference.

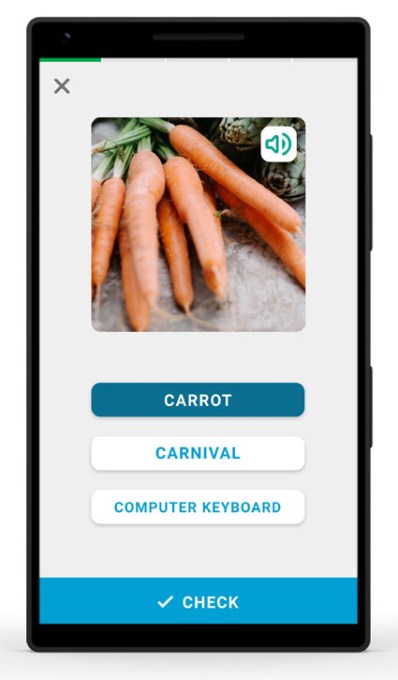

Finally, the app encourages users to practice their newly discovered words by way of three built-in vocabulary games.

The app’s 1,500-word vocabulary may seem small, but it’s actually close to the number of words foreign language learners are able to pick up through traditional study. According to a report from the BBC, for instance, many language learners struggle to learn more than 2,000 to 3,000 words even after years of study. In fact, one study in Taiwan found that after nine years of learning a foreign language, students failed to learn the 1,000 most frequently used words.

The report also stressed that it was most important to pick up the words used day-to-day.

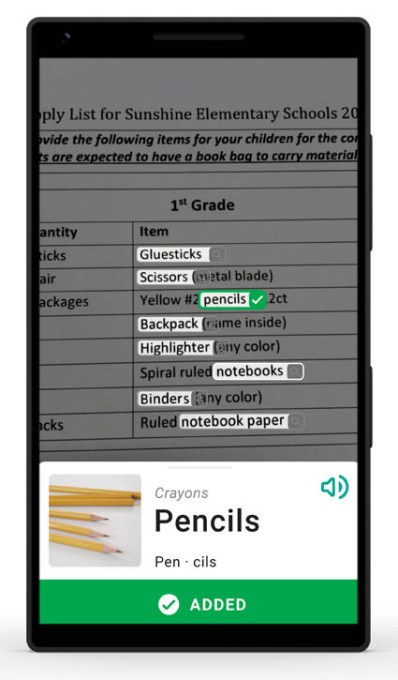

Because the app focuses on things you see, it’s limited in terms of replacing formal instruction. After gathering feedback from teachers and students who tested an early version, the team rolled out a feature to detect words in documents too. It’s not a Google Lens-like experience, where written words are translated into your own language. Rather, select words the app can identify are highlighted so you can hear how they sound and see a picture of what each one refers to.

For example, when pointed at a student’s school supply list, the app may pick out words like pencils, notebooks, scissors, and binders.

The app, a project from Microsoft’s in-house incubator Microsoft Garage, will initially be made available to select organizations for testing and feedback. Those who work with low-literacy communities at an NGO or nonprofit can request an invitation to join the experiment by filling out a form.

Source: TechCrunch