William ("Whurley") Hurley

Contributor

William Hurley, commonly known as whurley, is an American entrepreneur and the founder of Chaotic Moon Studios, Honest Dollar, and Equals: The Global Partnership for Gender Equality in the Digital Age. He is currently chairing the Quantum Computing Working Group for the IEEE Standards Association (IEEE-SA), and is the founder and chief executive of Strangeworks.

The word “quantum” gained currency in the late 20th century as a descriptor signifying something so significant it defied the use of common adjectives. For example, a “quantum leap” is a dramatic advancement (also an early ’90s television series starring Scott Bakula).

At best, that is an imprecise (though entertaining) definition. When “quantum” is applied to “computing,” however, we are indeed entering an era of dramatic advancement.

Quantum computing is technology based on the principles of quantum theory, which explains the nature of energy and matter on the atomic and subatomic level. It relies on the existence of mind-bending quantum-mechanical phenomena, such as superposition and entanglement.

Erwin Schrödinger’s famous 1935 thought experiment, involving a cat that was both dead and alive at the same time, was intended to highlight the apparent absurdity of superposition: the principle that quantum systems can exist in multiple states simultaneously until observed or measured. Today’s quantum computers contain dozens of qubits (quantum bits), which take advantage of that very principle. Each qubit exists in a superposition of zero and one (i.e., it has non-zero probabilities of being measured as a zero or a one) until it is measured. Qubits could make it possible to handle massive amounts of data and to reach previously unattainable levels of computing efficiency; that is the tantalizing potential of quantum computing.
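To make the “non-zero probabilities” idea concrete, here is a minimal Python sketch, not tied to any real quantum SDK, that represents one qubit by two amplitudes, `alpha` for 0 and `beta` for 1, and applies the Born rule: the probability of each outcome is the squared magnitude of its amplitude. The function names are hypothetical, and the “measurement” is simulated with a seeded classical random-number generator.

```python
import math
import random


def outcome_probabilities(alpha: complex, beta: complex):
    """Born rule for one qubit alpha|0> + beta|1>: P(0) = |alpha|^2, P(1) = |beta|^2."""
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return p0, p1


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the superposition yields a plain 0 or 1."""
    p0, _ = outcome_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# Equal superposition of 0 and 1: each outcome has probability 1/2.
alpha = beta = 1 / math.sqrt(2)
p0, p1 = outcome_probabilities(alpha, beta)

rng = random.Random(42)  # fixed seed so the run is reproducible
counts = [0, 0]
for _ in range(10_000):
    counts[measure(alpha, beta, rng)] += 1

print(f"P(0)={p0:.3f}, P(1)={p1:.3f}, observed counts={counts}")
```

Each individual measurement returns only a 0 or a 1; the underlying probabilities show up in the statistics of many repeated runs, which is why the counts land near 5,000 each rather than exactly on it.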

While Schrödinger was thinking about zombie cats, Albert Einstein was troubled by what he called “spooky action at a distance”: particles that seemed to influence each other faster than the speed of light. What he was describing was entanglement. Entanglement refers to the observation that the states of particles from the same quantum system cannot be described independently of each other. Even when the particles are separated by great distances, they are still part of the same system. If you measure one particle, the others seem to know instantly. The current record distance for measuring entangled particles is 1,200 kilometers, or about 745.6 miles. Entanglement means that the whole quantum system is greater than the sum of its parts.
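The claim that entangled particles “cannot be described independently” can be checked directly on their amplitudes. The minimal Python sketch below assumes the standard two-qubit Bell state (|00⟩ + |11⟩)/√2 as its example; the helper names are hypothetical and the simulation is entirely classical. It tests whether a state factors into two independent single-qubit states, then samples joint measurements to show that the two outcomes always agree.

```python
import math
import random

# Two-qubit state as amplitudes over the basis states |00>, |01>, |10>, |11>.
BELL = {"00": 1 / math.sqrt(2), "01": 0.0, "10": 0.0, "11": 1 / math.sqrt(2)}


def is_product_state(amps, tol=1e-12):
    """A two-qubit pure state factors into independent single-qubit states
    exactly when a00 * a11 == a01 * a10."""
    return abs(amps["00"] * amps["11"] - amps["01"] * amps["10"]) < tol


def measure_pair(amps, rng):
    """Sample a joint outcome with probability |amplitude|^2 (Born rule)."""
    r = rng.random()
    cumulative = 0.0
    for outcome, amp in amps.items():
        cumulative += abs(amp) ** 2
        if r < cumulative:
            return outcome
    return outcome  # only reached through floating-point round-off


rng = random.Random(7)  # fixed seed so the run is reproducible
samples = [measure_pair(BELL, rng) for _ in range(1_000)]
agree = all(s[0] == s[1] for s in samples)  # do both qubits always match?

print("product state?", is_product_state(BELL), "| outcomes always agree?", agree)
```

One caveat: perfect agreement when both particles are measured the same way could also be mimicked by pre-arranged classical answers; ruling that out requires comparing measurements made in different bases, as Bell-test experiments do. The sketch also passes no signal between the simulated particles, consistent with the fact that entanglement cannot be used to send messages faster than light.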

If these phenomena make you vaguely uncomfortable so far, perhaps I can assuage that feeling simply by quoting Schrödinger, who purportedly said of the quantum theory he helped develop, “I don’t like it, and I’m sorry I ever had anything to do with it.”

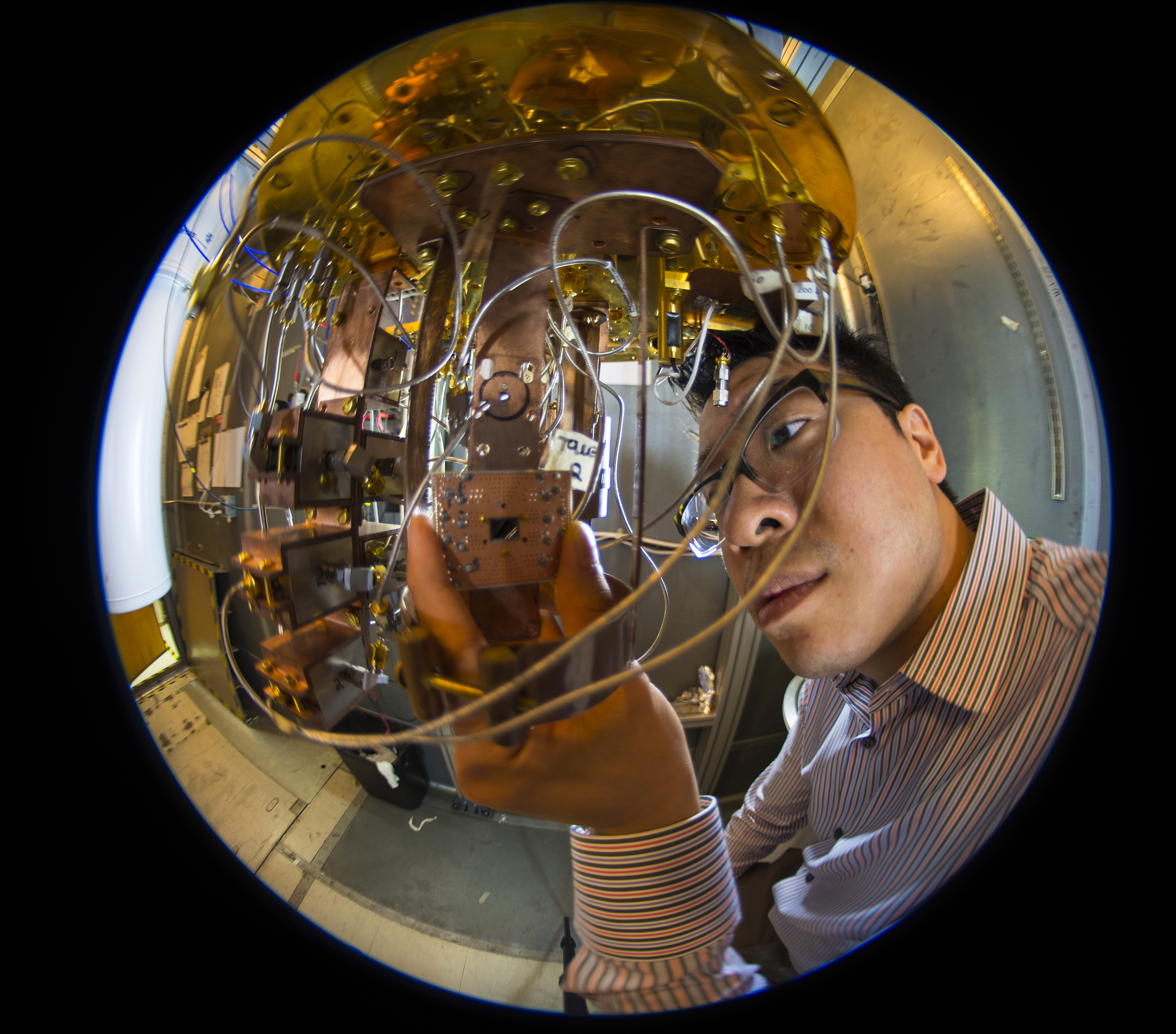

Various parties are taking different approaches to quantum computing, so no single explanation of how it works covers them all. But one principle may help readers get their arms around the difference between classical computing and quantum computing. Classical computers are binary: every bit can exist in only one of two states, either 0 or 1. Schrödinger’s cat illustrated that a quantum system can instead exist in a combination of states at the same time. Envision a sphere: a classical bit can sit only at the “north pole,” say, representing 0, or at the south pole, representing 1. A qubit’s state can lie anywhere on the surface of that sphere, giving it innumerable possible states. Relating those states between qubits enables correlations that make quantum computers well-suited to specific tasks that classical computers cannot accomplish efficiently. Creating qubits and preserving their fragile quantum states long enough to complete quantum computing tasks is an ongoing challenge.
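The sphere in this analogy is usually called the Bloch sphere. As a minimal Python sketch, assuming the common textbook convention in which the north pole is 0 and the south pole is 1, a point at polar angle `theta` and azimuth `phi` corresponds to the qubit state cos(θ/2)|0⟩ + e^(iφ) sin(θ/2)|1⟩. The function name below is hypothetical.

```python
import cmath
import math


def bloch_to_amplitudes(theta, phi):
    """Map a point on the Bloch sphere (polar angle theta, azimuth phi)
    to qubit amplitudes: cos(theta/2)|0> + e^(i*phi) * sin(theta/2)|1>."""
    alpha = complex(math.cos(theta / 2), 0.0)
    beta = cmath.exp(1j * phi) * math.sin(theta / 2)
    return alpha, beta


for label, theta, phi in [
    ("north pole", 0.0, 0.0),                 # the classical 0
    ("south pole", math.pi, 0.0),             # the classical 1
    ("equator", math.pi / 2, 0.0),            # equal superposition
    ("equator, rotated", math.pi / 2, math.pi / 2),  # same odds, different phase
]:
    alpha, beta = bloch_to_amplitudes(theta, phi)
    p0 = abs(alpha) ** 2  # probability of measuring 0
    print(f"{label:>16}: P(0)={p0:.2f}  P(1)={1 - p0:.2f}")
```

The two equator points print identical probabilities even though they are different states: they differ only in the relative phase `phi`. Phase is invisible to a single measurement of this kind, but it affects how qubits interfere and correlate, which is part of why a qubit’s continuum of states matters.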

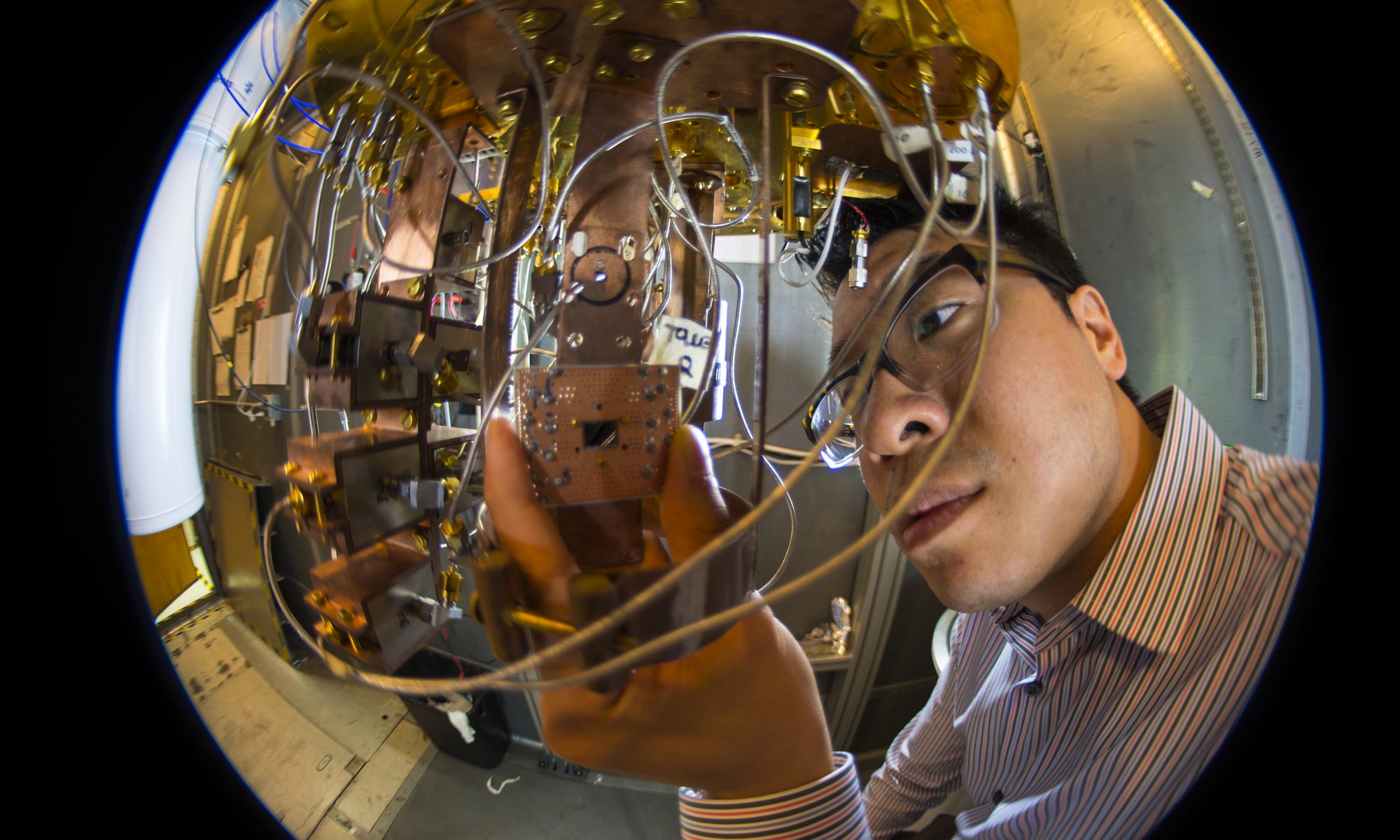

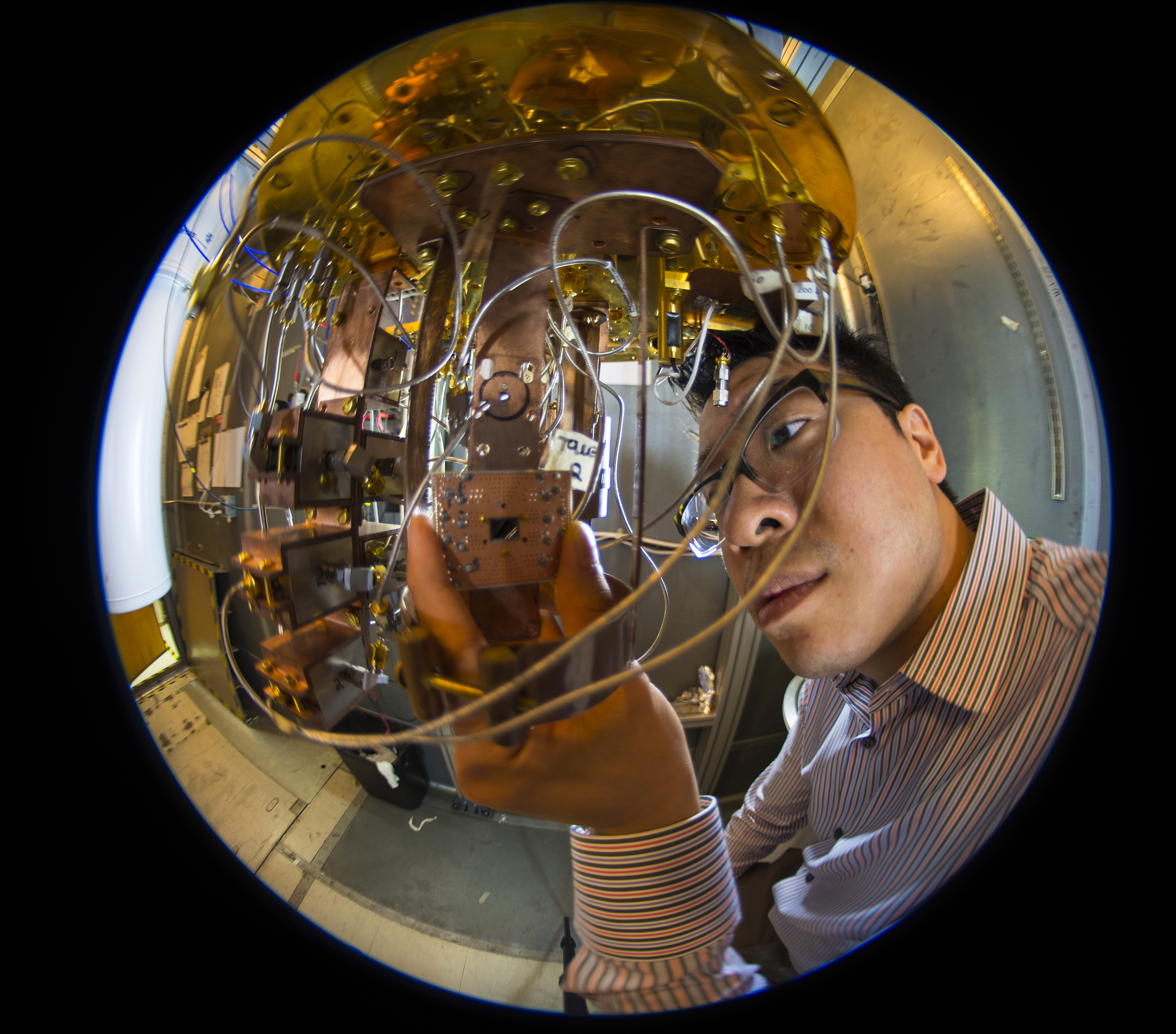

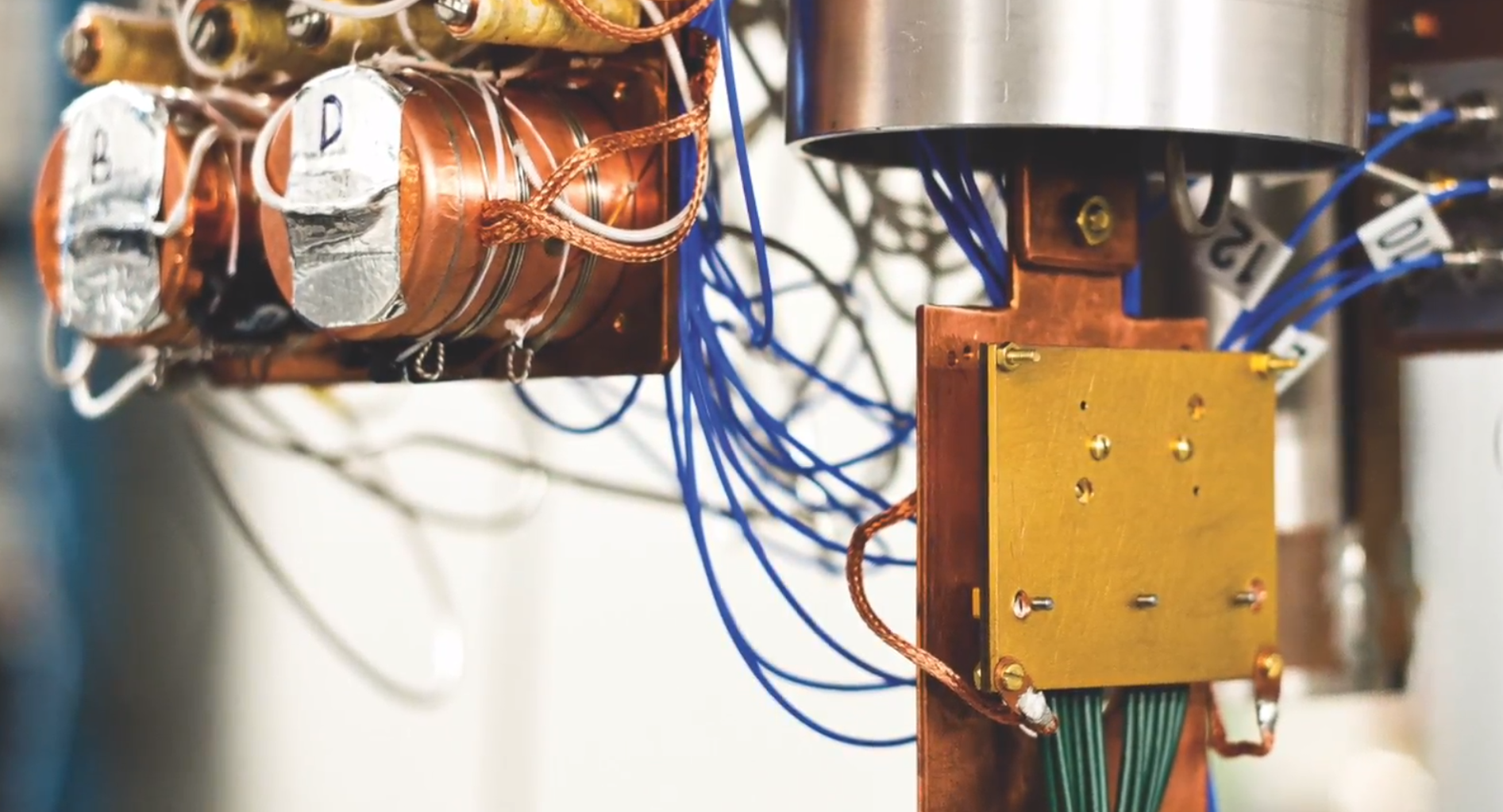

IBM researcher Jerry Chow in the quantum computing lab at IBM’s T.J. Watson Research Center.

Humanizing Quantum Computing

These are just the beginnings of the strange world of quantum mechanics. Personally, I’m enthralled by quantum computing. It fascinates me on many levels, from its technical arcana to its potential applications that could benefit humanity. But a qubit’s worth of witty obfuscation on how quantum computing works will have to suffice for now. Let’s move on to how it will help us create a better world.

Quantum computing’s purpose is to aid and extend the abilities of classical computing. Quantum computers will perform certain tasks much more efficiently than classical computers, providing us with a new tool for specific applications. Quantum computers will not replace their classical counterparts. In fact, quantum computers depend on classical computers to support the specialized tasks they excel at, such as systems optimization.

Quantum computers will be useful in advancing solutions to challenges in diverse fields such as energy, finance, healthcare, and aerospace. Their capabilities will help us cure diseases, improve global financial markets, detangle traffic, combat climate change, and more. For instance, quantum computing has the potential to speed up pharmaceutical discovery and development, and to improve the accuracy of the atmospheric models used to track and explain climate change and its adverse effects.

I call this “humanizing” quantum computing, because such a powerful new technology should be used to benefit humanity, or we’re missing the boat.

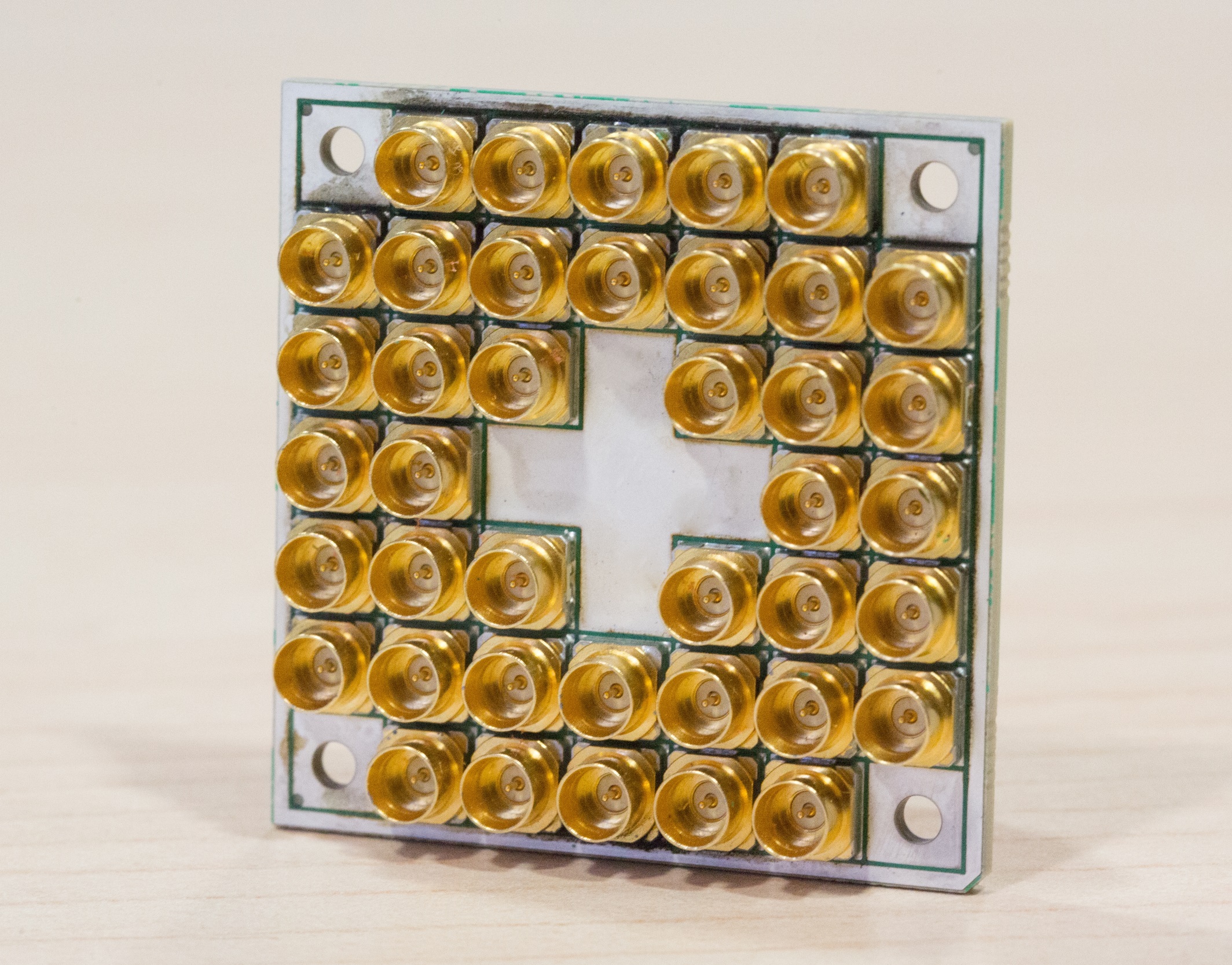

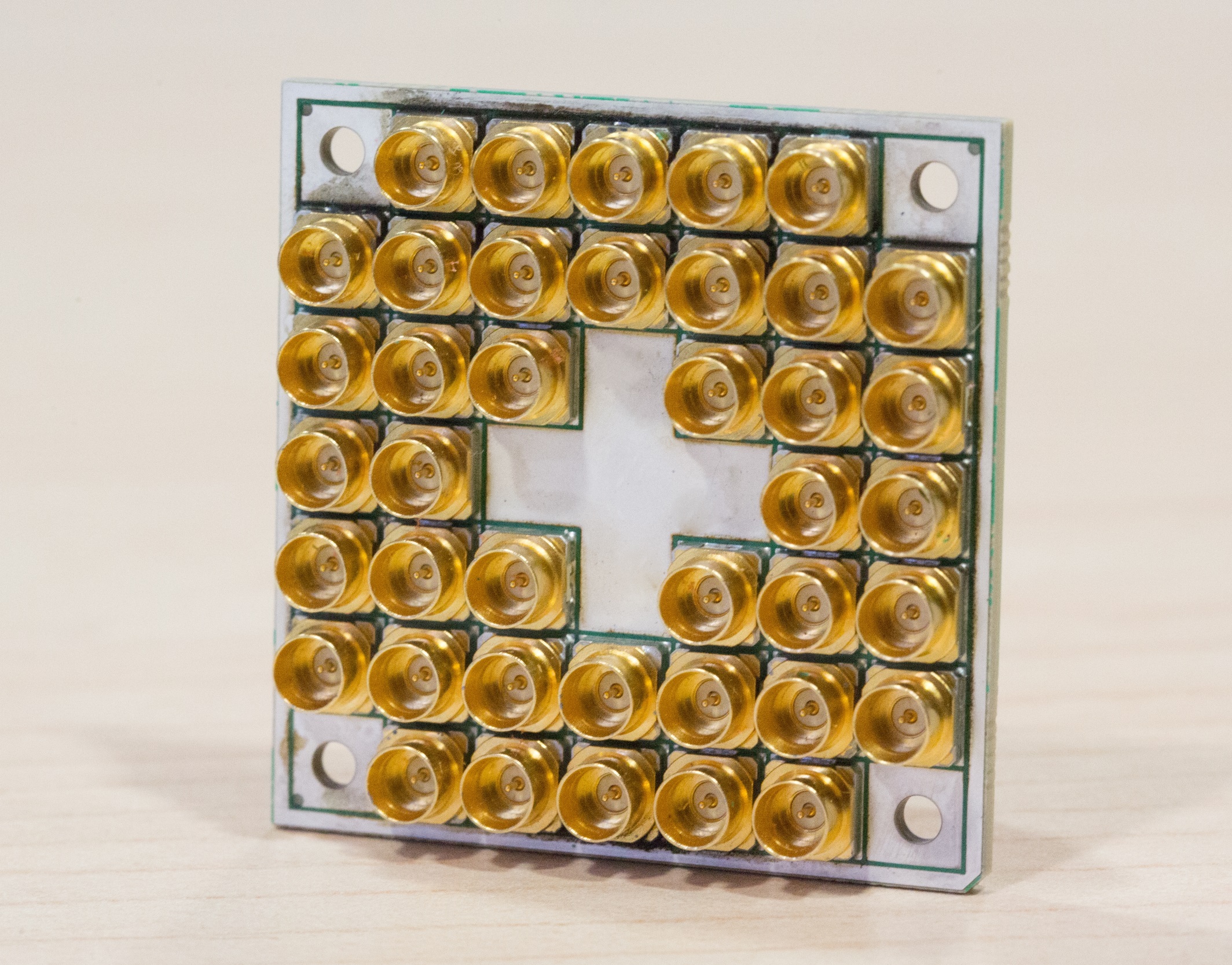

Intel’s 17-qubit superconducting test chip for quantum computing has unique features for improved connectivity and better electrical and thermo-mechanical performance. (Credit: Intel Corporation)

An Uptick in Investments, Patents, Startups, and more

That’s my inner evangelist speaking. In factual terms, the latest verifiable global figures for investment and patent applications reflect an uptick in both areas, a trend that’s likely to continue. Going into 2015, non-classified national investments in quantum computing reflected an aggregate global spend of about $1.75 billion USD, according to The Economist. The European Union led with $643 million. The U.S. was the top individual nation with $421 million invested, followed by China ($257 million), Germany ($140 million), Britain ($123 million) and Canada ($117 million). Twenty countries have invested at least $10 million in quantum computing research.

At the same time, according to a patent search enabled by Thomson Innovation, the U.S. led in quantum computing-related patent applications with 295, followed by Canada (79), Japan (78), Great Britain (36), and China (29). The number of patent families related to quantum computing was projected to increase 430 percent by the end of 2017.

The upshot is that nations, giant tech firms, universities, and start-ups are exploring quantum computing and its range of potential applications. Some parties (e.g., nation states) are pursuing quantum computing for security and competitive reasons. It’s been said that quantum computers will break current encryption schemes, kill blockchain, and serve other dark purposes.

I reject that proprietary, cutthroat approach. It’s clear to me that quantum computing can serve the greater good through an open-source, collaborative research and development approach that I believe will prevail once wider access to this technology is available. I’m confident crowd-sourcing quantum computing applications for the greater good will win.

If you want to get involved, check out the free tools that the household-name computing giants such as IBM and Google have made available, as well as the open-source offerings out there from giants and start-ups alike. Actual time on a quantum computer is available today, and access opportunities will only expand.

In keeping with my view that proprietary solutions will succumb to open-source, collaborative R&D and universal quantum computing value propositions, allow me to point out that several dozen start-ups in North America alone have joined governments and academia in the quantum computing (QC) ecosystem. Names such as Rigetti Computing, D-Wave Systems, 1Qbit Information Technologies, Inc., Quantum Circuits, Inc., QC Ware, and Zapata Computing, Inc. may become well known, be subsumed by bigger players, or be undone by their burn rates; anything is possible in this nascent field.

Developing Quantum Computing Standards

Another way to get involved is to join the effort to develop quantum computing-related standards. Technical standards ultimately speed the development of a technology, introduce economies of scale, and grow markets. Quantum computer hardware and software development will benefit from a common nomenclature, for instance, and agreed-upon metrics to measure results.

Currently, the IEEE Standards Association Quantum Computing Working Group is developing two standards. One is for quantum computing definitions and nomenclature so we can all speak the same language. The other addresses performance metrics and performance benchmarking to enable measurement of quantum computers’ performance against classical computers and, ultimately, each other.

The need for additional standards will become clear over time.

Source: TechCrunch