TVs this year will ship with a new feature called “filmmaker mode,” but unlike the last dozen things the display industry has tried to foist on consumers, this one actually matters. It doesn’t magically turn your living room into a movie theater, but it’s an important step in that direction.

This new setting arose out of concerns among filmmakers (hence the name) that users were getting a sub-par viewing experience of the media that creators had so painstakingly composed.

The average TV these days is actually quite a quality piece of kit compared to a few years back. But few owners ever change the default settings. This was beginning to be a problem, explained LG’s director of special projects, Neil Robinson, who helped define the filmmaker mode specification and execute it on the company’s displays.

“When people take TVs out of the box, they play with the settings for maybe five minutes, if you’re lucky,” he said. “So filmmakers wanted a way to drive awareness that you should have the settings configured in this particular way.”

In the past, filmmakers have taken to social media and other platforms to make this point, but it’s hard to say how effective such a call to action is, even when it’s Tom Cruise and Chris McQuarrie begging you to turn off motion smoothing.

While very few people really need to tweak the gamma or adjust individual color levels, there are a couple of settings that are absolutely crucial for a film or show to look the way it’s intended. The most important are the ones that fall under the general term “motion processing.”

These settings have a variety of fancy-sounding names, like “game mode,” “motion smoothing,” “truemotion,” and the like, and they are on by default on many TVs. What they do differs from model to model, but it amounts to taking content at, say, 24 frames per second, and converting it to content at, say, 120 frames per second.

Generally this means inventing the images that come between the 24 actual frames — so if a person’s hand is at point A in one frame of a movie and point C in the next, motion processing will create a point B to go in between — or B, X, Y, Z, and dozens more if necessary.
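To make the arithmetic concrete: going from 24 to 120 frames per second means the TV has to show five frames for every real one, so it must invent four in each gap. The Python sketch below computes that count and then makes the crudest possible guess at the in-between positions, a straight line from A to C. It is only an illustration under loud assumptions: the function names are hypothetical, it assumes we already know exactly where the hand is in each real frame (a real TV has to estimate motion from the pixels, which is where artifacts creep in), and it assumes the display rate is a whole multiple of the source rate.

```python
def frames_to_invent(source_fps: int, display_fps: int) -> int:
    """Number of synthetic frames needed between each pair of real frames.

    Assumes display_fps is a whole multiple of source_fps (e.g. 24 -> 120).
    Other combinations (24 fps on a 60 Hz panel) are normally handled by
    repeating frames unevenly (3:2 pulldown) rather than inventing them.
    """
    if display_fps % source_fps != 0:
        raise ValueError("display rate must be a whole multiple of the source rate")
    return display_fps // source_fps - 1


def interpolate_positions(
    a: tuple[float, float], c: tuple[float, float], n: int
) -> list[tuple[float, float]]:
    """Naive straight-line guesses for an object's position between A and C.

    Hypothetical helper: real motion processing estimates motion per block
    of pixels instead of being told where the object is.
    """
    steps = n + 1  # n invented frames split the gap into n + 1 equal steps
    return [
        (a[0] + (c[0] - a[0]) * k / steps, a[1] + (c[1] - a[1]) * k / steps)
        for k in range(1, steps)
    ]
```

For example, `frames_to_invent(24, 120)` returns 4, and `interpolate_positions((0, 0), (10, 0), 4)` places the invented "point B" frames at x = 2, 4, 6 and 8. That evenly spaced, perfectly smooth motion is exactly the look the next paragraphs object to.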

This is bad for several reasons:

First, it produces a smoothness of motion that lies somewhere between real life and film, giving an uncanny look to motion-processed imagery that people often say reminds them of bad daytime TV shot on video — which is why people call it the “soap opera effect.”

Second, some of these algorithms are better than others, and some media is more compatible than the rest (sports broadcasts, for instance). While at best they produce the soap opera effect, at worst they can produce weird visual artifacts that can distract even the least sensitive viewer.

And third, it’s an aesthetic affront to the creators of the content, who usually crafted it very deliberately, choosing this shot, this frame rate, this shutter speed, this take, this movement, and so on with purpose and a careful eye. It’s one thing if your TV has the colors a little too warm or the shadows overbright — quite another to create new frames entirely with dubious effect.

So filmmakers, and in particular cinematographers, whose work crafting the look of the movie is most affected by these settings, began petitioning TV companies to either turn motion processing off by default or create some kind of easily accessible method for users to disable it themselves.

Ironically, the option already existed on some displays. “Many manufacturers already had something like this,” said Robinson. But with different names, different locations within the settings, and different exact effects, no user could really be sure what these various modes actually did. LG’s was “Technicolor Expert Mode.” Does that sound like something the average consumer would be inclined to turn on? I like messing with settings, and I’d probably keep away from it.

So the movement was more about standardization than reinvention. With a single name, icon, and prominent placement instead of being buried in a sub-menu somewhere, this is something people may actually see and use.

Not that there was no back-and-forth on the specification itself. For one thing, filmmaker mode also lowers the peak brightness of the TV to a relatively dark 100 nits — at a time when high brightness, daylight visibility, and contrast ratio are specs manufacturers want to show off.

The reason for this is, very simply, to make people turn off the lights.

There’s very little anyone in the production of a movie can do to control your living room setup or how you actually watch the film. But restricting your TV to certain levels of brightness does have the effect of making people want to dim the lights and sit right in front. Do you want to watch movies in broad daylight, with the shadows pumped up so bright they look grey? Feel free, but don’t imagine that’s what the creators consider ideal conditions.


“As long as you view in a room that’s not overly bright, I’d say you’re getting very close to what the filmmakers saw in grading,” said Robinson. Filmmaker mode’s color controls are rather loose, he noted, but you’ll get the correct aspect ratio, white balance, no motion processing, and generally no weird surprises from not delving deep enough into the settings.

The full list of changes can be summarized as follows:

- Maintain source frame rate and aspect ratio (no stretched or sped-up imagery)

- Motion processing off (no smoothing)

- Peak brightness reduced (keeps shadows dark — this may change with HDR content)

- Sharpening and noise reduction off (standard items with dubious benefit)

- Other “image enhancements” off (non-standard items with dubious benefit)

- White point at D65/6500K (prevents colors from looking too warm or cool)
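For readers who think in configuration terms, the list above amounts to a fixed set of overrides applied on top of whatever the TV was set to before. The Python sketch below expresses it that way. The `PictureSettings` fields and the `apply_filmmaker_mode` function are hypothetical stand-ins, since every manufacturer names and buries these controls differently, and the 100-nit figure is the standard-content value (HDR handling may differ, as noted above).

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PictureSettings:
    # Hypothetical field names; real TVs use vendor-specific labels.
    motion_processing: bool
    sharpening: bool
    noise_reduction: bool
    other_enhancements: bool
    keep_source_frame_rate: bool
    keep_source_aspect_ratio: bool
    peak_brightness_nits: int
    white_point_kelvin: int


def apply_filmmaker_mode(current: PictureSettings) -> PictureSettings:
    """Return a copy of `current` with the filmmaker mode overrides applied."""
    return replace(
        current,
        motion_processing=False,        # no smoothing / invented frames
        sharpening=False,
        noise_reduction=False,
        other_enhancements=False,
        keep_source_frame_rate=True,    # no sped-up imagery
        keep_source_aspect_ratio=True,  # no stretching
        peak_brightness_nits=100,       # SDR figure; HDR may differ
        white_point_kelvin=6500,        # approximately D65
    )
```

Returning a new object instead of editing the old one leaves the previous settings untouched, which is what a TV needs if it is to switch back afterwards.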

All this, however, relies on people being aware of the mode and choosing to switch to it. Exactly how that will work depends on several factors. The ideal option is probably a filmmaker mode button right on the clicker, which is at least theoretically the plan.

The alternative is a content specification, as opposed to a display one, that allows TVs to automatically enter filmmaker mode when a piece of media requests it. But this requires content providers to take advantage of the APIs that make the automatic switching possible, so don’t count on it.

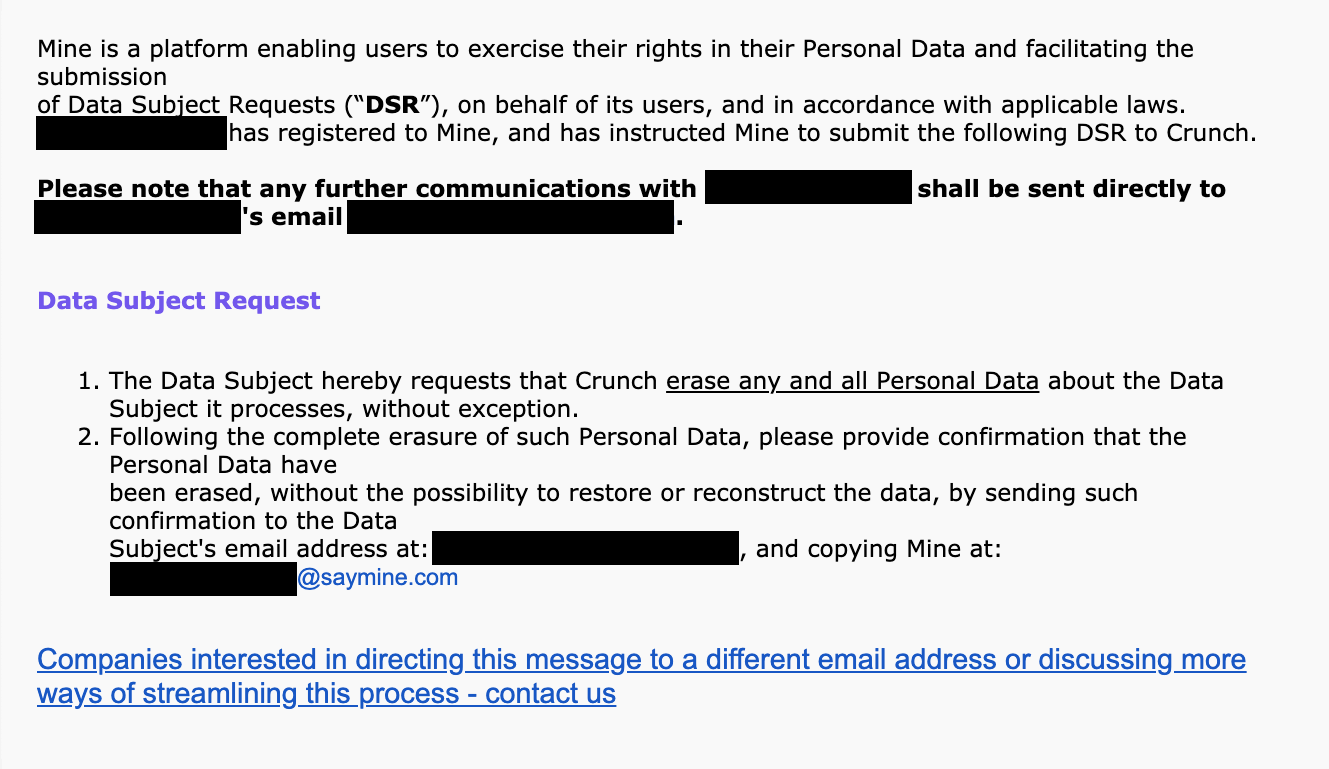

And of course this has its own difficulties, including privacy concerns — do you really want your shows to tell your devices what to do and when? So a middle road where the TV prompts the user to “Show this content in filmmaker mode? Yes/No” and automatic fallback to the previous settings afterwards might be the best option.
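That middle road can be sketched as a Python context manager: the content’s request only triggers a prompt, nothing changes unless the viewer says yes, and the saved settings come back when playback ends. Everything here is hypothetical (the settings keys, the `content_requests_it` flag, the `ask_user` callback); it illustrates the flow, not any manufacturer’s or content provider’s actual API.

```python
from collections.abc import Callable, Iterator
from contextlib import contextmanager

# Hypothetical subset of filmmaker mode overrides, for illustration only.
FILMMAKER_OVERRIDES = {"motion_processing": False, "peak_brightness_nits": 100}


@contextmanager
def maybe_filmmaker_mode(
    settings: dict,
    content_requests_it: bool,
    ask_user: Callable[[str], bool],
) -> Iterator[dict]:
    """Enter filmmaker mode only if the content asks AND the viewer agrees,
    then restore the previous settings when playback ends."""
    saved = dict(settings)  # snapshot to fall back to afterwards
    # Short-circuit: the viewer is only prompted if the content asked.
    if content_requests_it and ask_user("Show this content in filmmaker mode?"):
        settings.update(FILMMAKER_OVERRIDES)
    try:
        yield settings
    finally:
        settings.clear()
        settings.update(saved)  # automatic fallback to the old settings
```

Because the restore sits in the `finally` clause, the old settings return even if playback is interrupted by an error, and a viewer who answers “No” never sees anything change.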

There are other improvements that can be pursued to make home viewing more like the theater, but as Robinson pointed out, there are simply fundamental differences between LCD and OLED displays and the projectors used in theaters — and even then there are major differences between projectors. But that’s a whole other story.

At the very least, the mode as planned represents a wedge that content purists (it has a whiff of derogation but they may embrace the term) can widen over time. Getting the average user to turn off motion processing is the first and perhaps most important step — everything after that is incremental improvement.

So which TVs will have filmmaker mode? It’s unclear. LG, Vizio, and Panasonic have all committed to bringing out models with the feature, and it’s even possible it could be added to older models with a software update (but don’t count on it). Sony is a holdout for now. No one is sure exactly which models will have filmmaker mode available, so cast an eye over the spec list of any TV you’re thinking of getting and, if you’ll take my advice, don’t buy a TV without it.

Source: TechCrunch