It’s a bit strange to hear that the world’s leading social network is pursuing research in robotics rather than, say, making search useful, but Facebook is a big organization with many competing priorities. And while these robots aren’t directly going to affect your Facebook experience, what the company learns from them could be impactful in surprising ways.

Though robotics is a new area of research for Facebook, its reliance on and bleeding-edge work in AI are well known. Mechanisms that could be called AI (the definition is quite hazy) govern all sorts of things, from camera effects to automated moderation of restricted content.

AI and robotics are naturally overlapping magisteria (it’s why we have an event covering both), and advances in one often spur advances, or open new areas of inquiry, in the other. So really it’s no surprise that Facebook, with its strong interest in using AI for a variety of tasks in the real and social media worlds, might want to dabble in robotics to mine for insights.

What then could be the possible wider applications of the robotics projects it announced today? Let’s take a look.

Learning to walk from scratch

“Daisy” the hexapod robot.

Walking is a surprisingly complex action, or series of actions, especially when you’ve got six legs, like the robot used in this experiment. You can program in how it should move its legs to go forward, turn around, and so on, but doesn’t that feel a bit like cheating? After all, we had to learn on our own, with no instruction manual or settings to import. So the team looked into having the robot teach itself to walk.

This isn’t a new type of research — lots of roboticists and AI researchers are into it. Evolutionary algorithms (different but related) go back a long way, and we’ve already seen interesting papers like this one:

By giving their robot some basic priorities like being “rewarded” for moving forward, but no real clue how to work its legs, the team let it experiment and try out different things, slowly learning and refining the model by which it moves. The goal is to cut the time it takes the robot to go from zero to reliable locomotion, from weeks down to hours.
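To make the "rewarded for moving forward" idea concrete, here is a minimal sketch of trial-and-error learning. It is not Facebook's method: the `forward_distance` scoring function is a made-up stand-in for a real robot trial or simulator, the six-number gait encoding is hypothetical, and the search is plain hill climbing, far simpler than whatever the researchers actually use.

```python
import random


def forward_distance(gait):
    """Toy stand-in for one robot trial (an assumption, not real physics).

    `gait` holds six phase offsets in [0, 1), one per leg. The invented
    scoring favors an alternating-tripod pattern: legs 0, 2, 4 move
    together and legs 1, 3, 5 move half a cycle later.
    """
    target = [0.0, 0.5, 0.0, 0.5, 0.0, 0.5]
    error = 0.0
    for phase, want in zip(gait, target):
        diff = abs(phase - want) % 1.0
        error += min(diff, 1.0 - diff)  # phases wrap around the cycle
    return 3.0 - error  # higher is better; 3.0 is a perfect score


def learn_gait(trials=2000, step=0.1, seed=0):
    """Hill climbing: nudge the gait at random, keep changes that score higher."""
    rng = random.Random(seed)
    best = [rng.random() for _ in range(6)]  # start with no clue how to walk
    best_reward = forward_distance(best)
    for _ in range(trials):
        candidate = [(p + rng.uniform(-step, step)) % 1.0 for p in best]
        reward = forward_distance(candidate)
        if reward > best_reward:  # the only feedback is the reward itself
            best, best_reward = candidate, reward
    return best, best_reward


start_reward = forward_distance([random.Random(0).random() for _ in range(6)])
learned_gait, learned_reward = learn_gait()
```

The robot here is never told what a good gait looks like. It only finds out, after each attempt, how far it got, which is the part that carries over to the real experiment.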

What could this be used for? Facebook is a vast wilderness of data, complex and dubiously structured. Learning to navigate a network of data is of course very different from learning to navigate an office — but the idea of a system teaching itself the basics on a short timescale given some simple rules and goals is shared.

Learning how AI systems teach themselves, and how to remove roadblocks like mistaken priorities, cheating the rules, weird data-hoarding habits and other stuff is important for agents meant to be set loose in both real and virtual worlds. Perhaps the next time there is a humanitarian crisis that Facebook needs to monitor on its platform, the AI model that helps do so will be informed by the autodidactic efficiencies that turn up here.

Leveraging “curiosity”

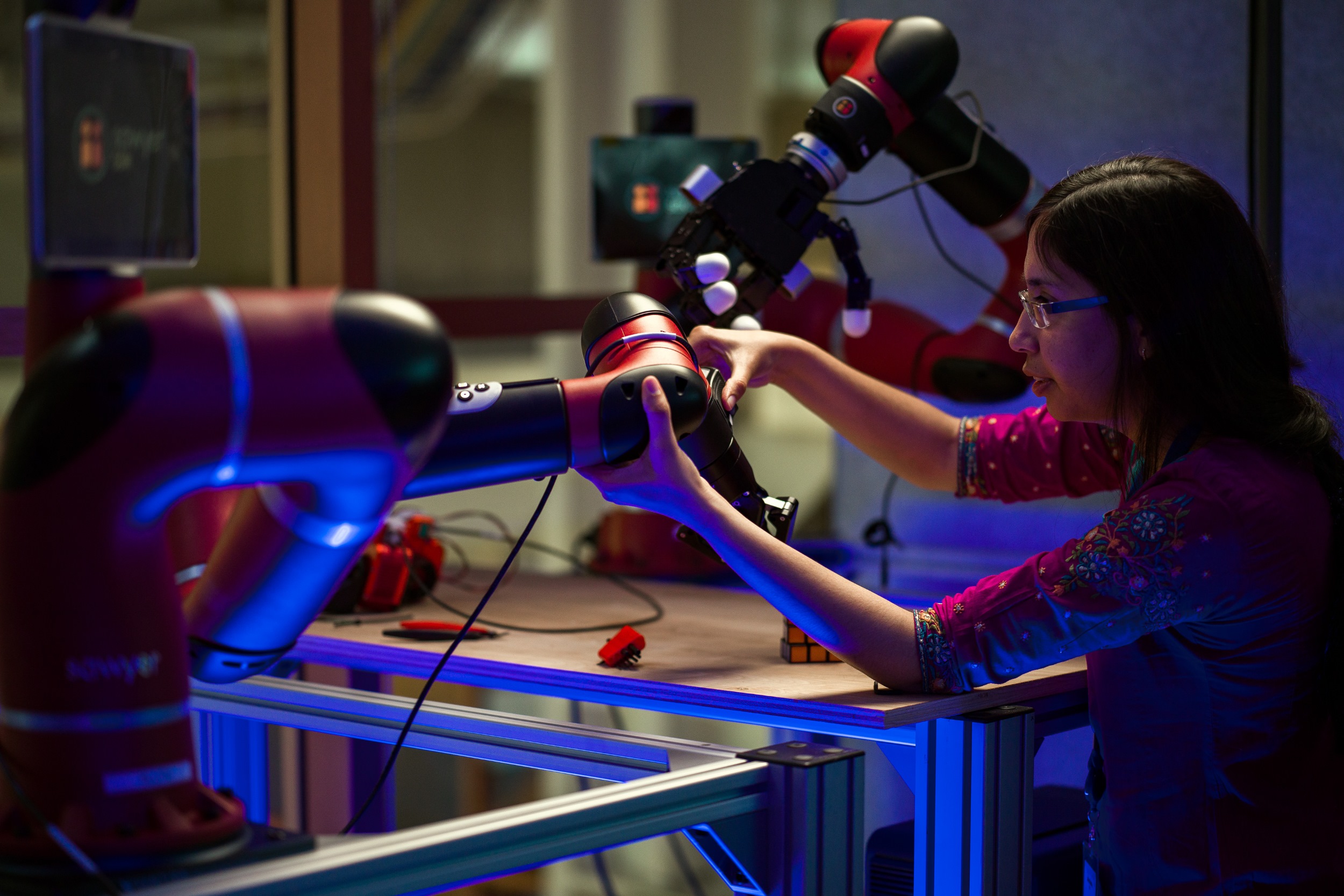

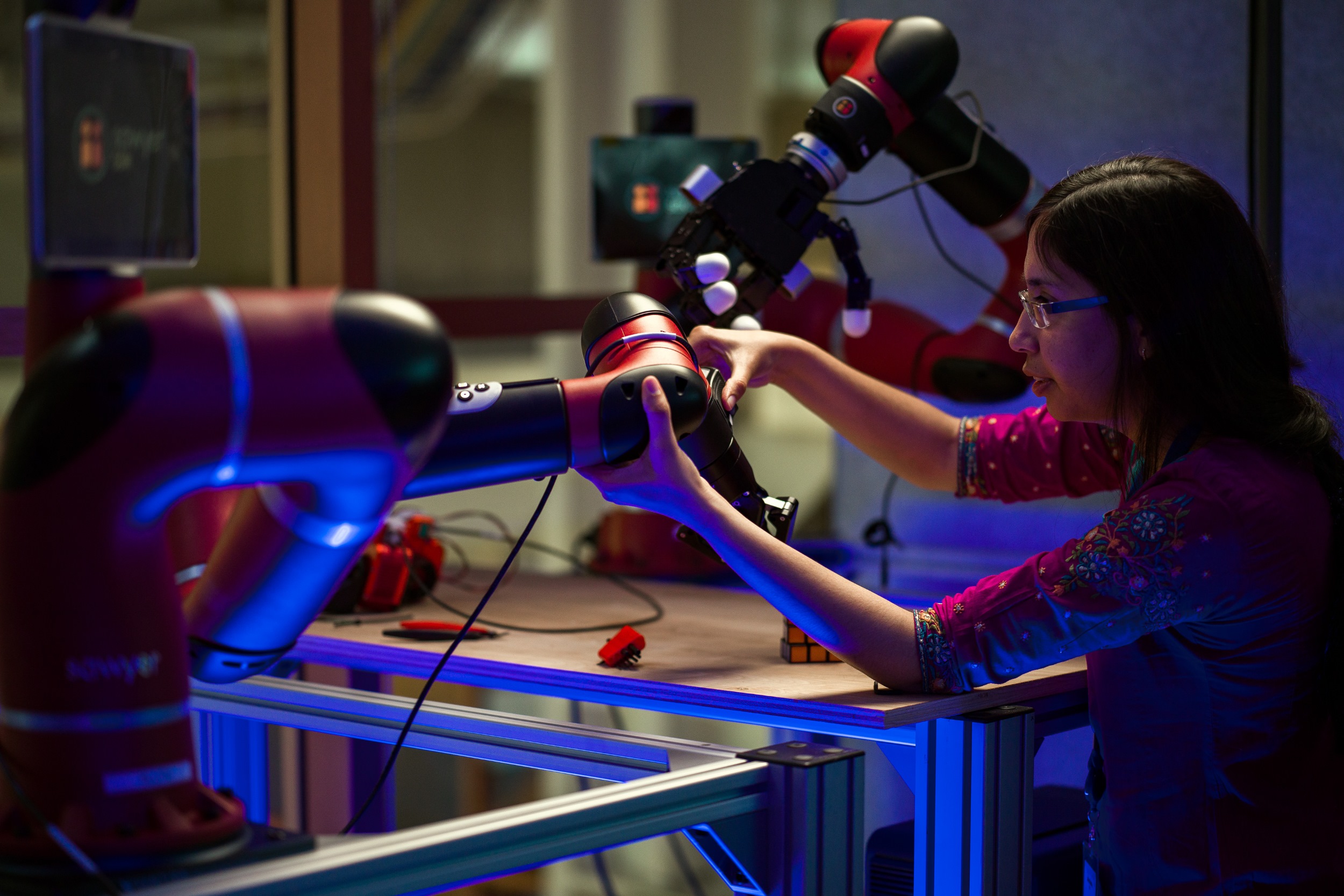

Researcher Akshara Rai adjusts a robot arm in the robotics AI lab in Menlo Park. (Facebook)

This work is a little less visual, but more relatable. After all, everyone feels curiosity to a certain degree, and while we understand that sometimes it kills the cat, most times it’s a drive that leads us to learn more effectively. Facebook applied the concept of curiosity to a robot arm being asked to perform various ordinary tasks.

Now, it may seem odd that they could imbue a robot arm with “curiosity,” but what’s meant by that term in this context is simply that the AI in charge of the arm — whether it’s seeing or deciding how to grip, or how fast to move — is given motivation to reduce uncertainty about that action.

That could mean lots of things — perhaps twisting the camera a little while identifying an object gives it a little bit of a better view, improving its confidence in identifying it. Maybe it looks at the target area first to double check the distance and make sure there’s no obstacle. Whatever the case, giving the AI latitude to find actions that increase confidence could eventually let it complete tasks faster, even though at the beginning it may be slowed by the “curious” acts.
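One common way to formalize "motivation to reduce uncertainty" is to score each possible action by how much it is expected to shrink the entropy of the agent's belief. The sketch below does that for a toy case. The two hypotheses, the action names, and all the probabilities are invented for illustration, and nothing here is taken from Facebook's implementation.

```python
import math


def entropy(belief):
    """Shannon entropy in bits: how unsure the agent is."""
    return -sum(p * math.log2(p) for p in belief if p > 0)


def posterior(belief, likelihood):
    """Bayes update, where likelihood[i] = P(observation | hypothesis i)."""
    unnormalized = [p * l for p, l in zip(belief, likelihood)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def expected_entropy_after(belief, obs_model):
    """Average entropy after acting; obs_model[o][i] = P(obs o | hypothesis i)."""
    expected = 0.0
    for likelihood in obs_model:
        p_obs = sum(p * l for p, l in zip(belief, likelihood))
        if p_obs > 0:
            expected += p_obs * entropy(posterior(belief, likelihood))
    return expected


def most_curious_action(belief, actions):
    """Pick the action with the largest expected drop in uncertainty."""
    now = entropy(belief)
    gains = {name: now - expected_entropy_after(belief, model)
             for name, model in actions.items()}
    return max(gains, key=gains.get), gains


# Hypothetical setup: is the object a mug or a bowl? The agent is 50/50.
belief = [0.5, 0.5]
actions = {
    "stay_put": [[0.5, 0.5], [0.5, 0.5]],     # same view, tells it nothing
    "tilt_camera": [[0.9, 0.2], [0.1, 0.8]],  # better angle, more telling
}
choice, gains = most_curious_action(belief, actions)
```

In this toy setup tilting the camera wins because it is the only action whose result depends on what the object actually is. That is the "curious" detour that costs a moment up front and pays off in confidence.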

What could this be used for? Facebook is big on computer vision, as we’ve seen both in its camera and image work and in devices like Portal, which (some would say creepily) follows you around the room with its “face.” Learning about the environment is critical for both these applications and for any others that require context about what they’re seeing or sensing in order to function.

Any camera operating in an app or device like those from Facebook is constantly analyzing the images it sees for usable information. When a face enters the frame, that’s the cue for a dozen new algorithms to spin up and start working. If someone holds up an object, does it have text? Does it need to be translated? Is there a QR code? What about the background, how far away is it? If the user is applying AR effects or filters, where does the face or hair stop and the trees behind begin?

If the camera, or gadget, or robot, left these tasks to be accomplished “just in time,” they would produce CPU usage spikes, visible latency in the image, and all kinds of stuff the user or system engineer doesn’t want. But if it’s doing it all the time, that’s just as bad. If instead the AI agent is exerting curiosity to check these things when it senses too much uncertainty about the scene, that’s a happy medium. This is just one way it could be used, but given Facebook’s priorities it seems like an important one.

Seeing by touching

Although vision is important, it’s not the only way that we, or robots, perceive the world. Many robots are equipped with sensors for motion, sound, and other modalities, but actual touch is relatively rare. Chalk it up to a lack of good tactile interfaces (though we’re getting there). Nevertheless, Facebook’s researchers wanted to look into the possibility of using tactile data as a surrogate for visual data.

If you think about it, that’s perfectly normal — people with visual impairments use touch to navigate their surroundings or acquire fine details about objects. It’s not exactly that they’re “seeing” via touch, but there’s a meaningful overlap between the concepts. So Facebook’s researchers deployed an AI model that decides what actions to take based on video, but instead of actual video data, fed it high-resolution touch data.

Turns out the algorithm doesn’t really care whether it’s looking at an image of the world as we’d see it or not — as long as the data is presented visually, for instance as a map of pressure on a tactile sensor, it can be analyzed for patterns just like a photographic image.
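As a rough illustration of why the input's origin doesn't matter, the sketch below runs one function over a tiny grayscale "photo" and a tiny pressure map. Both grids and the `strongest_patch` helper are hypothetical, and a real system would use a learned model rather than a sliding-window sum. The point is only that a 2D grid of numbers is a 2D grid of numbers.

```python
def strongest_patch(grid, size=2):
    """Return (row, col) of the size-by-size window with the largest sum."""
    rows, cols = len(grid), len(grid[0])
    best_pos, best_sum = None, float("-inf")
    for r in range(rows - size + 1):
        for c in range(cols - size + 1):
            total = sum(grid[r + dr][c + dc]
                        for dr in range(size) for dc in range(size))
            if total > best_sum:
                best_pos, best_sum = (r, c), total
    return best_pos


# Made-up camera frame: brightness values, with a bright blob in the middle.
camera_pixels = [
    [0, 0, 0, 0],
    [0, 9, 8, 0],
    [0, 7, 9, 0],
    [0, 0, 0, 0],
]

# Made-up tactile reading: pressure values, with contact in one corner.
pressure_map = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.6, 0.7],
    [0.0, 0.0, 0.5, 0.9],
]

bright_spot = strongest_patch(camera_pixels)   # where the light is
contact_spot = strongest_patch(pressure_map)   # where the touch is
```

The same code finds the bright blob in one grid and the point of contact in the other without knowing which sense produced which.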

What could this be used for? It’s doubtful Facebook is super interested in reaching out and touching its users. But this isn’t just about touch — it’s about applying learning across modalities.

Think about how, if you were presented with two distinct objects for the first time, it would be trivial to tell them apart with your eyes closed, by touch alone. Why can you do that? Because when you see something, you don’t just understand what it looks like, you develop an internal model representing it that encompasses multiple senses and perspectives.

Similarly, an AI agent may need to transfer its learning from one domain to another — auditory data telling a grip sensor how hard to hold an object, or visual data telling the microphone how to separate voices. The real world is a complicated place and data is noisier here — but voluminous. Being able to leverage that data regardless of its type is important to reliably being able to understand and interact with reality.

So you see that while this research is interesting in its own right, and can in fact be explained on that simpler premise, it is also important to recognize the context in which it is being conducted. As the blog post describing the research concludes:

We are focused on using robotics work that will not only lead to more capable robots but will also push the limits of AI over the years and decades to come. If we want to move closer to machines that can think, plan, and reason the way people do, then we need to build AI systems that can learn for themselves in a multitude of scenarios — beyond the digital world.

As Facebook continually works on expanding its influence from its walled garden of apps and services into the rich but unstructured world of your living room, kitchen, and office, its AI agents require more and more sophistication. Sure, you won’t see a “Facebook robot” any time soon… unless you count the one they already sell, or the one in your pocket right now.

Source: TechCrunch

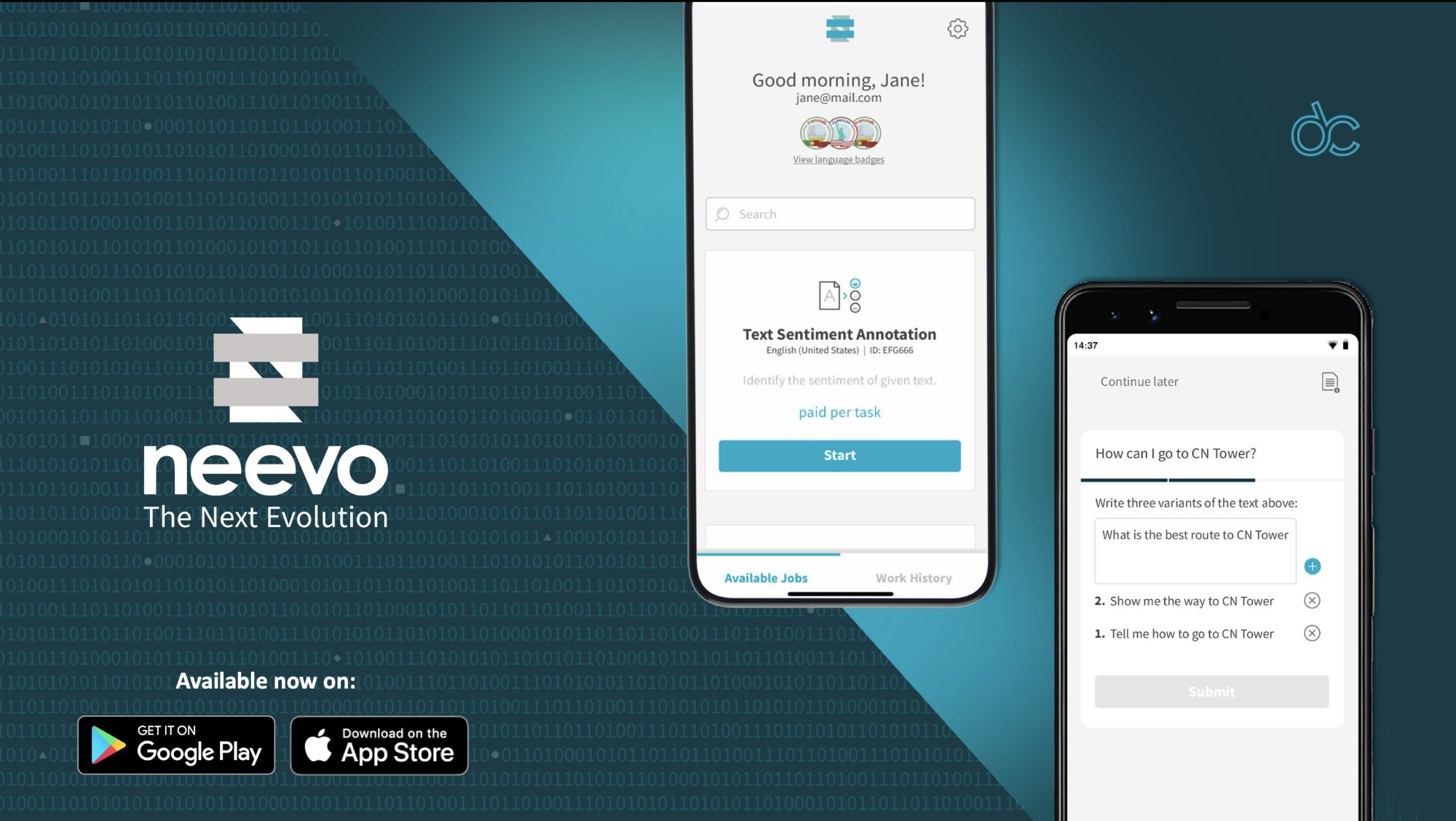

To that end DefinedCrowd has made its own app, which shares the Neevo branding of the company’s annotation community, that lets its annotators work whenever they want, tackling image or speech annotation tasks on the go. It’s available on iOS and Android starting today.

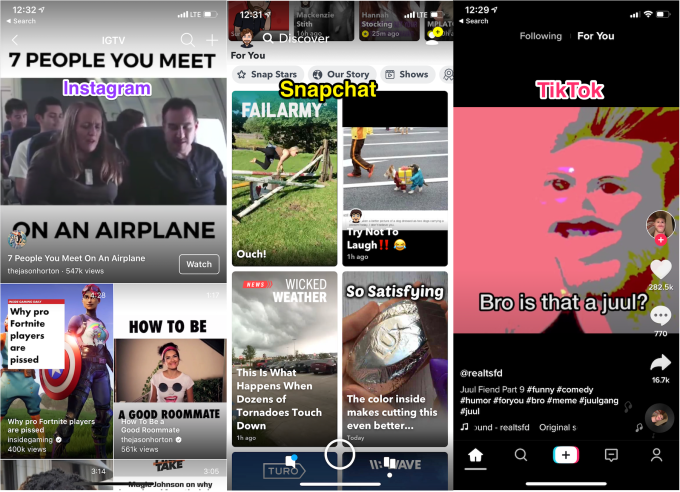

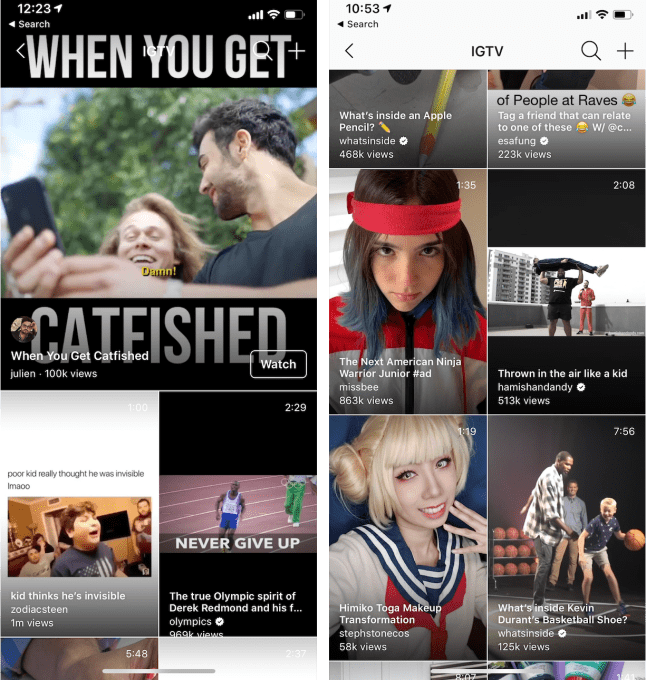

IGTV has ditched its category-based navigation system’s tabs like “For You”, “Following”, “Popular”, and “Continue Watching” for just one central feed of algorithmically suggested videos — much like TikTok. This affords a more lean-back, ‘just show me something fun’ experience that relies on Instagram’s AI to analyze your behavior and recommend content instead of putting the burden of choice on the viewer.
